Go is an ancient Chinese board game dating back more than 2,500 years. It is a simple-to-learn strategy game played on a 19×19 grid, where two players take turns placing black or white stones on the intersections. If you completely surround a group of your opponent’s stones, you capture them and remove them from the board. The goal is to control more of the board than your opponent. Simple as the rules are, the game is staggeringly complex. The number of possible board positions is roughly:

1,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,

000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000.

That is a 1 followed by 171 zeros, if you were curious, and it is larger than the number of atoms in the observable universe. Go also has vastly more possible outcomes than chess; if chess is a battle, I would consider Go a war.
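
One way to get a feel for this scale is a naive upper bound: each of the 361 intersections can be empty, black, or white, which gives 3^361 ways to fill the board. Many of those configurations are not legal positions, so the bound over-counts, but it lands in the same ballpark as the figure above. The quick check below is only a sketch; the constant names are illustrative, and the 10^80 atom count is a commonly quoted rough estimate used here as an assumption.

```python
# Naive upper bound on Go board configurations: every intersection of the
# 19x19 grid is either empty, black, or white, so there are 3**361 ways to
# fill the board. Many of these are illegal, so this over-counts.
BOARD_SIZE = 19
POINTS = BOARD_SIZE * BOARD_SIZE  # 361 intersections
STATES_PER_POINT = 3              # empty, black, white

upper_bound = STATES_PER_POINT ** POINTS  # exact integer; Python ints are unbounded

# Rough, commonly cited order-of-magnitude estimate (an assumption here).
ATOMS_IN_UNIVERSE = 10 ** 80

digits = len(str(upper_bound))
print(f"{POINTS} points -> 3**{POINTS} has {digits} digits")
print("Larger than 10**171:", upper_bound > 10 ** 171)
print("Larger than 10**80 atoms:", upper_bound > ATOMS_IN_UNIVERSE)
```

The bound works out to roughly 1.74 × 10^172, a 173-digit number.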

Because of the game’s complexity and the vast number of possible positions, it has always been difficult for AI to compete with strong human players. Although artificial intelligence has conquered chess, checkers, and other games, Go continued to elude the best programmers. Google has finally made a breakthrough with AlphaGo, which makes AI a formidable opponent for even professional Go players. Google went into some detail about what sets AlphaGo apart from other Go programs:

> Traditional AI methods—which construct a search tree over all possible positions—don’t have a chance in Go. So when we set out to crack Go, we took a different approach. We built a system, AlphaGo, that combines an advanced tree search with deep neural networks. These neural networks take a description of the Go board as an input and process it through 12 different network layers containing millions of neuron-like connections. One neural network, the “policy network,” selects the next move to play. The other neural network, the “value network,” predicts the winner of the game.

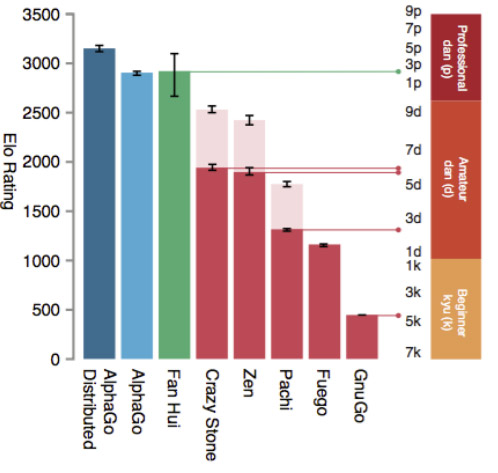
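
Google’s description stops at the level of two networks feeding a tree search. As a rough illustration of how the two can work together, here is a sketch of one selection step in a Monte Carlo tree search: the policy network’s move probabilities act as priors, and value estimates backed up from deeper in the tree act as each move’s score. The PUCT-style scoring rule is a common way to combine the two and is an assumption here, not AlphaGo’s exact formula; the `Child` structure, `c_puct`, and the move labels are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class Child:
    """Search statistics for one candidate move (hypothetical structure)."""
    prior: float            # P(s, a): policy-network probability for this move
    visits: int = 0         # N(s, a): simulations that went through this move
    value_sum: float = 0.0  # W(s, a): sum of value estimates backed up through it
    # Values are assumed to be from the perspective of the player choosing
    # among these moves; a full search flips the sign at alternate levels.

    @property
    def mean_value(self) -> float:
        # Q(s, a): average value; 0.0 for a move that has not been tried yet
        return self.value_sum / self.visits if self.visits else 0.0


def select_move(children: Dict[str, Child], c_puct: float = 1.0) -> str:
    """Pick the move maximizing Q + U: exploit moves that have scored well,
    but keep exploring moves the policy network likes and the search has
    rarely visited."""
    total_visits = sum(child.visits for child in children.values())
    # max(..., 1) is an assumption so priors still decide before any visit
    exploration_scale = math.sqrt(max(total_visits, 1))

    def score(child: Child) -> float:
        u = c_puct * child.prior * exploration_scale / (1 + child.visits)
        return child.mean_value + u

    return max(children, key=lambda move: score(children[move]))


def backup(child: Child, value: float) -> None:
    """Record one simulation's value estimate for this move."""
    child.visits += 1
    child.value_sum += value


if __name__ == "__main__":
    children = {
        "D4": Child(prior=0.6, visits=10, value_sum=5.0),
        "Q16": Child(prior=0.3, visits=1, value_sum=0.9),
        "K10": Child(prior=0.1),
    }
    # "Q16" wins: it has a high average value and has barely been explored.
    print(select_move(children))
```

In a full search, this selection step repeats down the tree until it reaches a new position, which is then evaluated (for example by a value network), and `backup` updates the statistics along the path.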

Google said they trained the neural network on 30 million moves from human games until it could predict the human’s next move 57 percent of the time, 13 percentage points higher than the previous Go AI record. Because they wanted AlphaGo to excel at beating players, not just mimicking them, they then had it play thousands of games between its neural networks to keep improving. Once that step was complete, it was time to put AlphaGo to the test.

First, they held a tournament against the other top Go programs, and AlphaGo won 499 of its 500 games. Then came the hard part: playing a highly skilled human opponent. Google invited the European Go champion, Fan Hui, to its London office to challenge AlphaGo. They played five games, and AlphaGo won all of them. This was the first time a computer program had ever beaten a professional Go player. Next on AlphaGo’s bucket list is a five-game challenge match in March against Lee Sedol, who has been regarded as the top Go player in the world for the past decade.
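
The 57 percent figure measures move prediction: for each position from recorded human games, check whether the network’s single most likely move matches the move the human actually played. A minimal sketch of that top-1 accuracy metric follows, assuming the network’s output for each position is a dictionary mapping moves to probabilities (a hypothetical format chosen for illustration).

```python
from typing import Dict, Sequence


def top1_accuracy(predictions: Sequence[Dict[str, float]],
                  human_moves: Sequence[str]) -> float:
    """Fraction of positions where the highest-probability predicted move
    equals the move the human actually played."""
    if len(predictions) != len(human_moves):
        raise ValueError("need one prediction per recorded human move")
    if not predictions:
        raise ValueError("no positions to score")
    hits = 0
    for probs, actual in zip(predictions, human_moves):
        predicted = max(probs, key=probs.get)  # the network's single best guess
        if predicted == actual:
            hits += 1
    return hits / len(human_moves)


if __name__ == "__main__":
    preds = [
        {"D4": 0.7, "Q16": 0.2, "C3": 0.1},
        {"Q4": 0.5, "D16": 0.4, "K10": 0.1},
        {"R17": 0.3, "C17": 0.6, "K4": 0.1},
        {"D3": 0.9, "E4": 0.1},
    ]
    actual = ["D4", "D16", "C17", "D3"]
    print(f"{top1_accuracy(preds, actual):.0%}")  # 75%
```

On this measure, the jump from the previous record of 44 percent to 57 percent is a gain of 13 percentage points.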

Google also explained why the result matters beyond the game itself:

> We are thrilled to have mastered Go and thus achieved one of the grand challenges of AI. However, the most significant aspect of all this for us is that AlphaGo isn’t just an “expert” system built with hand-crafted rules; instead it uses general machine learning techniques to figure out for itself how to win at Go. While games are the perfect platform for developing and testing AI algorithms quickly and efficiently, ultimately we want to apply these techniques to important real-world problems. Because the methods we’ve used are general-purpose, our hope is that one day they could be extended to help us address some of society’s toughest and most pressing problems, from climate modelling to complex disease analysis. We’re excited to see what we can use this technology to tackle next!

With this breakthrough in artificial intelligence, it will be interesting to see where Google takes its neural network learning system next. All this news about Go is making me want to get back into playing it. I haven’t played much since the only person I used to play with moved back to Japan. If you play Go and would like a game online from time to time, let me know.

What do you think of Google finally building an AI strong enough to beat professionals at a board game that many thought computers could never master? Let us know in the comments, on Facebook, Google+, or Twitter.

[Source: Official Google Blog](https://googleblog.blogspot.com/2016/01/alphago-machine-learning-game-go.html)
